In an earlier article (http://beeyeas.blogspot.com/2017/02/how-to-run-graylog-cluster-in-kubernetes.html) I described how to run a Graylog cluster in Kubernetes. That approach ran all of Graylog's components (MongoDB, Elasticsearch and the Graylog server) together in one single container instance on minikube.

Here I take a different approach: running MongoDB, Elasticsearch and Graylog2 as separate micro-service containers on Google Cloud Platform's Google Container Engine (GKE).

Step 1: See here (http://beeyeas.blogspot.com/2017/02/gke-google-container-engine.html) for how to bring up GKE (a Kubernetes instance).

I assume you followed those instructions and have the "kubectl" CLI configured to point to your gcloud GKE instance.

Make sure you can access the Kubernetes dashboard; for this you have to proxy the service:

#kubectl proxy

Step 2: Clone the repo with the Kubernetes service and deployment files (https://github.com/beeyeas/graylog-kube).

Step 3: Create the mongo, elasticsearch and graylog services and deployments

#kubectl create -f mongo-service.yaml,mongo-deployment.yaml

#kubectl create -f elasticsearch-deployment.yaml,elasticsearch-service.yaml

#kubectl create -f graylog-deployment.yaml,graylog-service.yaml
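Graylog keeps its configuration in MongoDB and indexes messages in Elasticsearch, so it is sensible to create those two before the Graylog deployment, as the commands above do. Below is a minimal Python sketch of the same three commands, assuming kubectl is on PATH and the script runs from the cloned repo directory (file names as in the commands above). create_commands and create_all are hypothetical helper names; with dry_run=True the commands are only printed.

```python
import subprocess
from typing import List, Sequence, Tuple

# Manifest pairs from the graylog-kube repo, in dependency order:
# MongoDB and Elasticsearch first, Graylog (which needs both) last.
MANIFESTS: Sequence[Tuple[str, str]] = (
    ("mongo-service.yaml", "mongo-deployment.yaml"),
    ("elasticsearch-deployment.yaml", "elasticsearch-service.yaml"),
    ("graylog-deployment.yaml", "graylog-service.yaml"),
)


def create_commands(manifests: Sequence[Tuple[str, str]] = MANIFESTS) -> List[List[str]]:
    """Build one 'kubectl create -f a,b' argv per manifest pair."""
    return [["kubectl", "create", "-f", ",".join(pair)] for pair in manifests]


def create_all(dry_run: bool = True) -> List[List[str]]:
    """Print (dry run) or execute the commands in order.

    check=True makes subprocess.run raise CalledProcessError on the first
    failing command, so Graylog is not created if mongo/elasticsearch fail.
    """
    cmds = create_commands()
    for cmd in cmds:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)
    return cmds


if __name__ == "__main__":
    create_all(dry_run=True)
```

Note that kubectl create returns as soon as the objects are accepted, not when the pods are ready; "kubectl get pods" shows when they are actually running.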

Step 4: Forward the Graylog UI to local port 9000 (replace the pod name with your own Graylog pod as listed by "kubectl get pods")

#kubectl port-forward graylog-2041601814-5qnbc 9000:9000
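The pod name in the command above is specific to the cluster it was run on; yours will have a different suffix. A minimal Python sketch for looking it up from "kubectl get pods -o json", assuming kubectl is on PATH and the Graylog pods are named with a "graylog-" prefix (as in the example name). find_pod and graylog_pod_name are hypothetical helpers.

```python
import json
import subprocess
from typing import Optional


def find_pod(pods_json: str, prefix: str = "graylog-") -> Optional[str]:
    """Return the first Running pod whose name starts with prefix, else None."""
    for item in json.loads(pods_json).get("items", []):
        name = item["metadata"]["name"]
        phase = item.get("status", {}).get("phase")
        if name.startswith(prefix) and phase == "Running":
            return name
    return None


def graylog_pod_name() -> Optional[str]:
    """Ask the cluster for all pods (JSON output) and pick the Graylog one."""
    out = subprocess.run(
        ["kubectl", "get", "pods", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return find_pod(out)
```

For example: subprocess.run(["kubectl", "port-forward", graylog_pod_name(), "9000:9000"]). Newer kubectl versions also accept a resource reference such as deployment/graylog in place of a pod name (assuming the deployment is called graylog), which avoids the lookup entirely.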

Step 5: Verify that all services are up

#kubectl get services

    NAME            CLUSTER-IP    EXTERNAL-IP   PORT(S)              AGE
    elasticsearch   None          <none>        55555/TCP            1h
    graylog         10.3.248.24   <none>        9000/TCP,12201/TCP   1h
    kubernetes      10.3.240.1    <none>        443/TCP              1d
    mongo           None          <none>        55555/TCP            1h

NOTE: Open localhost:9000 in your browser for the Graylog UI (served through the port-forward from Step 4).
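Instead of reading the table by eye, the same check can be scripted against "kubectl get services -o json". A minimal Python sketch, assuming the service names and Graylog ports shown in the table above; service_ports and service_problems are hypothetical helpers, and the JSON text is expected to be captured separately (for example with subprocess).

```python
import json
from typing import Dict, List, Set

# Expected services and ports, taken from the "kubectl get services" table above.
EXPECTED: Dict[str, Set[int]] = {
    "mongo": set(),
    "elasticsearch": set(),
    "graylog": {9000, 12201},
}


def service_ports(services_json: str) -> Dict[str, Set[int]]:
    """Map each service name to the set of ports declared in its spec."""
    result: Dict[str, Set[int]] = {}
    for item in json.loads(services_json).get("items", []):
        ports = item.get("spec", {}).get("ports") or []
        result[item["metadata"]["name"]] = {p["port"] for p in ports}
    return result


def service_problems(services_json: str,
                     expected: Dict[str, Set[int]] = EXPECTED) -> List[str]:
    """Return a list of problems; an empty list means every service is present."""
    found = service_ports(services_json)
    problems: List[str] = []
    for name, ports in expected.items():
        if name not in found:
            problems.append(f"service {name} not found")
            continue
        for port in sorted(ports - found[name]):
            problems.append(f"service {name} does not expose port {port}")
    return problems
```

A service existing does not mean its pods are healthy; "kubectl get pods" is still the place to check that.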

Step 6: The Graylog UI is still empty because nothing is sending it logs yet. I have exposed port 12201 for this, so configure a GELF HTTP input in Graylog and create a port-forward for it.

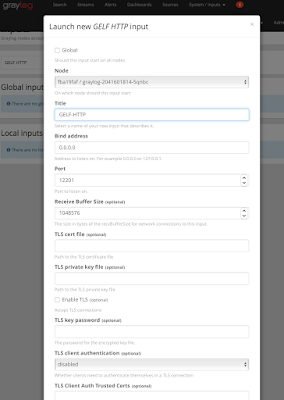

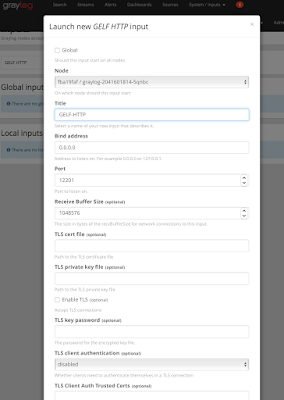

Make sure you have configured the GELF HTTP input in Graylog as shown in the screens above, then forward port 12201:

#kubectl port-forward graylog-2041601814-5qnbc 12201:12201
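Before sending logs, it can help to confirm that both port-forwards (9000 for the UI, 12201 for GELF HTTP) are listening locally. A minimal Python sketch using a plain TCP connect; port_open is a hypothetical helper. It only shows that kubectl port-forward is accepting local connections, not that Graylog behind it is healthy.

```python
import socket


def port_open(port: int, host: str = "127.0.0.1", timeout: float = 2.0) -> bool:
    """True if something accepts TCP connections on host:port within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, ...
        return False


if __name__ == "__main__":
    # Ports of the two port-forwards from Steps 4 and 6.
    for port in (9000, 12201):
        print(port, "open" if port_open(port) else "closed")
```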

Step 7: Now we can pump some test log statements into Graylog from localhost

for i in {1..100000}; do curl -s -XPOST -H 'Content-Type: application/json' -d '{"version":"1.1", "short_message":"Hello there", "host":"example.org", "facility":"test", "_foo":"bar"}' http://127.0.0.1:12201/gelf; done
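The curl loop can also be written in Python, which avoids starting a new process for every message. A minimal standard-library sketch, assuming the GELF HTTP input is reachable at http://127.0.0.1:12201/gelf through the port-forward above; build_gelf and send_gelf are hypothetical helpers. Per the GELF 1.1 specification, version, host and short_message are required, additional fields carry a leading underscore (and _id is not allowed), and a top-level facility is deprecated, so it is sent as _facility here.

```python
import json
import urllib.request
from typing import Any, Dict

# GELF HTTP input, reached through the kubectl port-forward on 12201.
GELF_URL = "http://127.0.0.1:12201/gelf"


def build_gelf(short_message: str, host: str = "example.org", **extra: Any) -> bytes:
    """Encode a GELF 1.1 message; extra fields get the required '_' prefix."""
    msg: Dict[str, Any] = {"version": "1.1", "host": host, "short_message": short_message}
    for key, value in extra.items():
        name = key if key.startswith("_") else "_" + key
        if name == "_id":
            raise ValueError("_id is reserved and not allowed in GELF")
        msg[name] = value
    return json.dumps(msg).encode("utf-8")


def send_gelf(payload: bytes, url: str = GELF_URL, timeout: float = 5.0) -> int:
    """POST one message and return the HTTP status code.

    urlopen raises HTTPError for 4xx/5xx responses and URLError if the
    port-forward is not running.
    """
    req = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

For example, to send 100 messages: for i in range(100): send_gelf(build_gelf("Hello there", facility="test", foo="bar", seq=i)). Graylog's GELF HTTP input is expected to answer 202 Accepted for each one.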

Once the loop from Step 7 is running, check that the messages show up in the Graylog UI.